Table of Contents

sampo.uef.fi

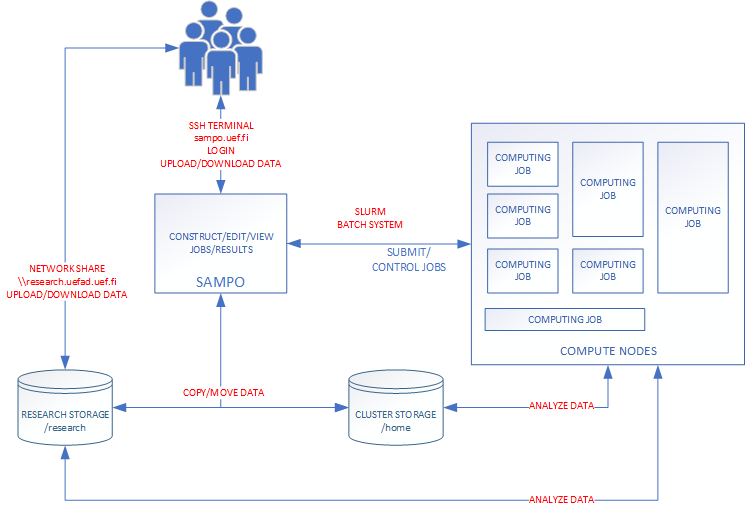

Sampo.uef.fi is High Performance Computing (HPC) environment running with the Slurm workload manager. It was launched in autumn, 2019 and is targeted at a wide range of workloads.

Note: The login node (sampo.uef.fi) can be used for light pre- and postprocessing, compiling applications and moving data. All other tasks are to be done using the batch job system.

Specs

In addition to the login node (sampo.uef.fi) the cluster has a total of 4 computing nodes. Each node is equipped with two Intel Xeon Gold processors, code name Skylake, with 40 cores each running at 2,4 GHz (max turbo frequency 3.7GHz). Additionally there are two GPU computing nodes equipped with 4xA100 (40GB) adapters. The nodes are connected with a 100 Gbps Omni-Path network. The login node act also as a file server to the computing nodes. Also there is one GPU-node for the GPU-workloads.

Login node

- Model: Dell R740XD

- CPU: 2 x Intel Xeon Gold 6130 (32 Cores/64 Threads)

- Memory: 376 GB

- address: sampo.uef.fi

Compute nodes

- 4x Dell C6420 (sampo[1-4])

- CPU: 2 x Intel Xeon Gold 6148 (40 Cores / 80 Threads)

- Memory:

- 3 Nodes 376 GB

- 1 Nodes 768 GB

- LOCAL DISK (/scratch): 300 GB SSD

- 2x Lenovo SR670 v2 (sampo[5-6])

- GPU: 4x NVIDIA A100 40 GB

- CPU: Intel Xeon Gold 6326 (32 Cores / 64 Threads)

- RAM: 512 GB

- LOCAL DISK (/scratch): 1.6 TB NVME

Paths

Additionally to the UEF IT Services Research Storage the cluster has its own local storage. There are no backups of the local storage so keep your important data on UEF IT Services Research Storage Space. Also in the future all old files (older than 2 months) will be automatically removed from group folders.

You can access the sampo.uef.fi storage via SMB-protocol.

Research storage provided by the UEF IT Services is also connected to the login and computing nodes.

Cluster storage

- /home/users/username - 250 GB User home directory ($HOME)

- /home/groups/groupname - Minimum 5 TB User research group folder

Computing node local storage Each computing node has 300 GB of local storage (SSD storage). You can access the local disk with /tmp path

UEF IT Research Storage

- /research/users/user - User home directory at \\research.uefad.uef.fi

- /research/work/user - User work directory at \\research.uefad.uef.fi

- /research/groups/groupname - User research group directory at \\research.uefad.uef.fi

Applications

To see the list of terminal application visit the available applications web page.

Slurm Workload Manager

SlurmWorkload Manager is an open source Job scheduler that is intended to control background executed programs. These background executed programs are called Jobs. User defines the Job with various parameters that include run time, number of tasks (CPU cores), amount of required memory (RAM) and specify which program(s) to execute. These jobs are called batch jobs. (Batch) Jobs are submitted to common job queue (partition) that is shared by the other users and Slurm will execute the submitted jobs automatically in turn. After the job is completed (or error occurs) Slurm can optionally notify the user with email notification. Additionally to the batch jobs user can reserve compute node for interactive jobs where you wait for your turn in queue and on your turn you are put on your reserved node where you can execute commands. After the reserved time is over your sessions is terminated.

Slurm Partitions

- serial. 4 out of 4 nodes. Maximum run time 3 days

- longrun. 2 out of 4 nodes. Maximum run time 14 days

- parallel. 2 of 4 nodes. Maximum run time 3 days.

- gpu. 1 node. Maximum run time 3 days.

Explanation of the partitions

Compute nodes are grouped in multiple partitions and each partition can be considered as a job queue. Partitions can have multiple constraints and restrictions. For example access for certain partitions can be limited by the user/group or the maximum running time can restricted.

Serial partition is the default partition for all jobs that user submits. User can reserve maximum of 1 nodes for his/her job. Default run time is 5 minutes and maximum 3 days.

Longrun partition is for long running jobs and only one node is for this usage. Default run time 5 minutes and maximum 14 days.

Parallel partition is for parallel jobs that can span over multiple nodes (MPI jobs for example). User can reserve 2 nodes (minimum and maximum). Default run time is 5 minutes and maximum 3 days.

GPU partition is for the GPU jobs. User can can reserve 1 node and default runtime is 5 minutes and maximum 3 days.

Getting started

See Slurm usage instruction from Slurm Workload Manager.

System monitoring

You can also monitor the status of the sampo computing cluster by visiting https://sampo.uef.fi URL. From there you can find various graphs concerning the CPU utilization, memory, network or disk usage.